AI Observability Platform

Take control of GenAI applications in production with a powerful AI observability platform. Track real-time metrics, token usage, and model costs. Use deep tracing to troubleshoot faster and improve reliability.

Key features

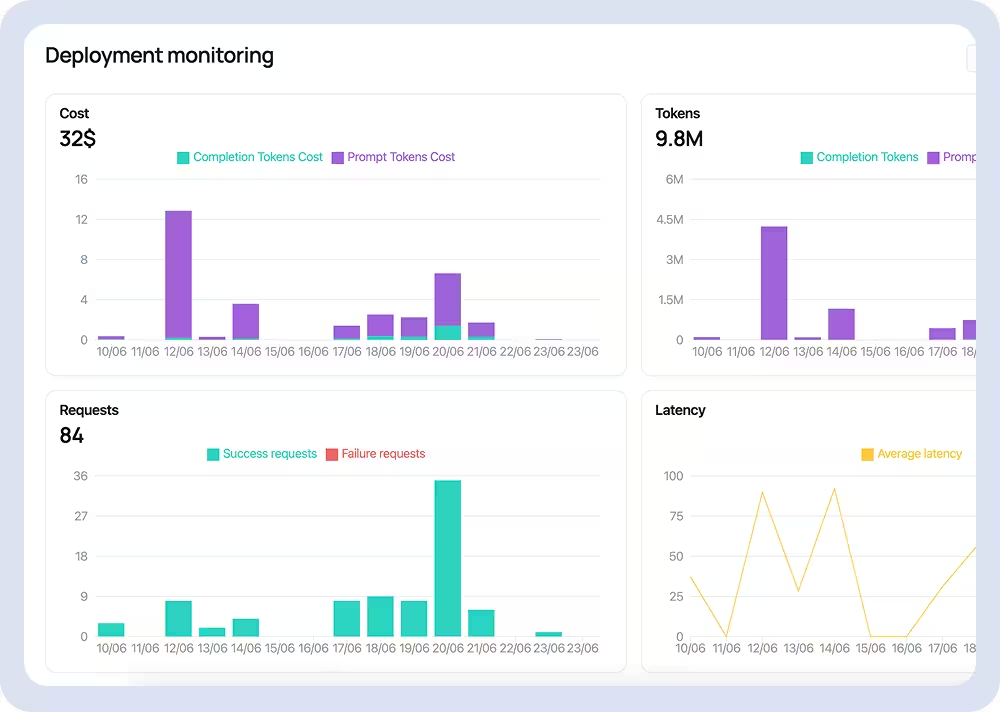

Live Monitoring Dashboard

- Monitor real-time GenAI activity, including request count, token usage, and system health

- Detect performance issues instantly through a unified visual dashboard

Cost and Latency Tracking

- Track request costs and response times across models, providers, and environments

- Forecast spending and optimize usage with real-time, actionable metrics
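As a rough illustration of what per-request cost and latency tracking involves, the sketch below derives a cost from token counts and aggregates cost and latency per model. It is not Dynamiq's implementation or API: the `RequestRecord` type, the `PRICE_PER_1K` table, the model names, and the prices are all hypothetical placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# Hypothetical prices in USD per 1,000 tokens; real provider pricing differs.
PRICE_PER_1K = {
    "model-a": {"input": 0.0005, "output": 0.0015},
    "model-b": {"input": 0.003, "output": 0.006},
}


@dataclass
class RequestRecord:
    """One logged LLM request (hypothetical schema)."""
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def request_cost(rec: RequestRecord) -> float:
    """Cost of a single request, computed from its token counts."""
    price = PRICE_PER_1K[rec.model]
    return (rec.input_tokens / 1000) * price["input"] + (
        rec.output_tokens / 1000
    ) * price["output"]


def summarize(records: list[RequestRecord]) -> dict[str, dict[str, float]]:
    """Group records by model and report request count, total cost, and mean latency."""
    by_model: dict[str, list[RequestRecord]] = defaultdict(list)
    for rec in records:
        by_model[rec.model].append(rec)
    return {
        model: {
            "requests": len(recs),
            "total_cost_usd": round(sum(request_cost(r) for r in recs), 6),
            "avg_latency_ms": mean(r.latency_ms for r in recs),
        }
        for model, recs in by_model.items()
    }


records = [
    RequestRecord("model-a", 1000, 500, 420.0),
    RequestRecord("model-a", 2000, 1000, 580.0),
    RequestRecord("model-b", 1000, 1000, 900.0),
]
summary = summarize(records)
for model, stats in summary.items():
    print(model, stats)
```

The same grouping key could be extended to a provider or environment field to get the cross-provider and cross-environment breakdowns described above.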

Deep Tracing & Request Logs

- View complete request and response traces to debug faster and reduce downtime

- Analyze agent behavior, edge cases, and bottlenecks to improve reliability

How it works

1. Build Your Workflow

Use Dynamiq’s low-code tools to create your GenAI pipeline or agent system

2. Deploy Seamlessly

Launch in your preferred environment — cloud, on-prem, or hybrid

3. Monitor in Real Time

Instantly access observability insights: view traces, costs, token logs, and performance metrics

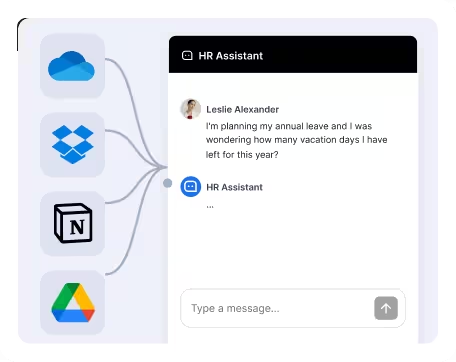

Integrate with your favourite tools

An end-to-end platform to manage the full GenAI application lifecycle

AI workflow builder

Easily orchestrate multiple AI agents to handle complex workflows.

Deployment

Deploy to your chosen environment in a few clicks.

Guardrails

Ensure reliability and full control over your GenAI applications.

Observability and evaluations

Highest-quality evaluations and monitoring at scale.

Chat with leading LLMs

Chat with any LLM from a single interface to find the best LLM for your use case.

Knowledge & RAG

Centralize and enhance your data to unlock its full potential.

Fine-tuning

Fine-tune any LLM on your proprietary data.

FAQ

What is an AI observability platform?

How is observability different from evaluations?

Why do I need observability for GenAI apps?

What can I track in Dynamiq’s observability dashboard?

Is this secure and enterprise-ready?

Start building your GenAI use cases

Curious to find out how Dynamiq can help you extract ROI and boost productivity in your organization? Let's chat.